Flying Monkey TV (FMTV) has a busy weekend ahead. On Friday I will be making some micro-docs and mini-promos for the forthcoming Castlegate Festival on the 20th & 21st June. Friday is the set-up day and not open to the public, but I will be interviewing the artists, shooting the set-up and environs, and making a few short videos about the activities to go online before the event.

On Saturday 20th I will be joining the People’s Assembly End Austerity Now public demonstration in London, and documenting that in whatever way I can. I am not planning any editing on-the-fly, but might publish a few clips from my iPhone. Other than the iPhone 5s, I will be taking a basic GoPro Hero, possibly a Canon EOS 550D and the RODE SmartLav+ mics. I have improvised a couple of wrist straps for the GoPro & iPhone and will also be taking a 12,000 mAh NiMH battery to recharge the cameras throughout the day. I don’t have any specific agenda for my shooting and have no official role, so I’m just going to see what happens.

On Sunday 21st I will be back in Castle House in Sheffield to join the Castlegate Festival and will be on-site throughout the day to talk to people about FMTV and how I go about making videos.

The most important aspect of this activity for FMTV is the workflow. The whole point of FMTV is to use easily available technologies (that is, not professional equipment), but still achieve good quality and get it published quickly. Sure, we can all shoot a bit of smartphone video and get it online very quickly, but the aim of FMTV is to make it as near to broadcast quality as is reasonably possible.

The smallest hurdles to making half-decent TV are at the beginning and the end, that is, the capture and the publishing. The world is awash with cheap, high-quality cameras and I have more online video capacity than I can possibly fill, but it’s the workflow between the two that requires the skill, and this is where it gets more difficult.

A pair of RODE SmartLav+ mics with twin adapter, E-Prance DashCam, GoPro Hero, 12,000 mAh USB battery (with iPhone 5s in the reflection) & clip-on fish-eye lens.

Whereas there’s nothing wrong with posting informal, unedited videos online, if you have more of a purpose it is often desirable to edit out the umms and ahhs, and the WobblyCam shots. I bought the iOS version of iMovie and have looked at it several times but, beyond basic trimming, it doesn’t do anything I want. I have no doubt it will mature, but I have decided to edit using Final Cut Pro 6 (FCP6) on an oldish MacBook. There are other video edit apps for iOS, but I don’t have an iPad yet and editing on an iPhone screen is nothing less than painful.

However, on the plus side, when I was looking for apps that would record audio from external mics whilst shooting video (another thing iMovie does not do), I bought an app called FiLMiC Pro by Cinegenix, which is superb (can’t find the price but it was only a few pounds). Unlike the built-in camera app, FiLMiC Pro allows you to create presets that store different combinations of resolution, quality and frame rate, rather than the built-in take-it-or-leave-it settings. I have found some less than complimentary reviews citing its flakiness, and I have had a few problems, but only a few. It allows you to choose where in the frame to focus and where to expose for, and it allows you to lock both settings.

http://www.filmicpro.com/apps/filmic-pro/

So, to shoot the promos / mini-docs, my main camera will be the iPhone 5s, controlled with FiLMiC Pro, with sound for interviews from a pair of RODE SmartLav+ lapel mics.

http://www.rode.com/microphones/smartlav

Then I will download the video clips to the Mac and transcode them into Apple Intermediate Codec (AIC) for editing. Some apps seem to be able to edit inter-frame encoded video (such as the H.264 from the iPhone), but in my experience FCP6 does not handle it well. I don’t know much about the specification of AIC, and it appears to be a lossy format, but it works well for me.
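For anyone who wants to script this step, here is a minimal sketch of building a transcode command in Python. It is an illustration under assumptions, not the workflow described above: it assumes `ffmpeg` is installed, and because ffmpeg (as far as I know) can decode AIC but not encode it, the sketch swaps in ProRes 422, another intra-frame editing codec that FCP6 can read. The function name `transcode_command`, the `transcoded` output folder and the `_prores` suffix are all hypothetical.

```python
from pathlib import Path


def transcode_command(source, out_dir="transcoded"):
    """Build an ffmpeg command that converts one clip to an intra-frame codec.

    Assumption: ProRes 422 (ffmpeg's prores_ks encoder) stands in for AIC,
    which ffmpeg is not able to encode.
    """
    src = Path(source)
    dst = Path(out_dir) / (src.stem + "_prores.mov")  # hypothetical naming scheme
    return [
        "ffmpeg",
        "-i", str(src),          # H.264 clip from the phone
        "-c:v", "prores_ks",     # intra-frame video codec for editing
        "-profile:v", "2",       # ProRes 422 "standard" profile
        "-c:a", "pcm_s16le",     # uncompressed 16-bit audio
        str(dst),
    ]


if __name__ == "__main__":
    # Print the command rather than running it, so nothing is overwritten.
    print(" ".join(transcode_command("clips/IMG_0001.MOV")))
```

To actually run the conversion, the returned list could be passed to `subprocess.run(cmd, check=True)` once the output folder exists.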

For the edits that I want to get online quickly, I will master to 1280x720p 25fps using a square overlay. This is so that I can edit the videos only once, in 16:9 aspect ratio, but they will be action-safe for uploading to Instagram, which crops everything square by default. We (the Studio COCOA team) decided to make things Instagram-friendly because it is populated by a younger audience than us middle-aged Twitter freaks, and is appropriate for the activities taking place over the weekend.

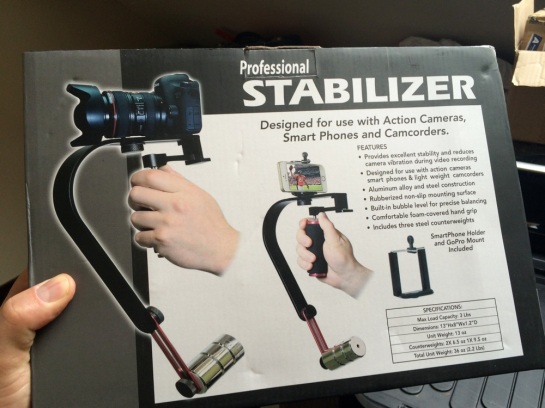
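The geometry of the square overlay is simple enough to work out in a few lines of Python. This is only a sketch of the arithmetic, and it assumes the square crop is taken from the centre of the frame; the function names `centre_square` and `inside_square` are made up for the illustration.

```python
def centre_square(width, height):
    """Return (x, y, w, h) of the largest centred square in a frame."""
    side = min(width, height)
    x = (width - side) // 2   # horizontal offset of the square
    y = (height - side) // 2  # vertical offset of the square
    return x, y, side, side


def inside_square(px, py, width=1280, height=720):
    """True if pixel (px, py) would survive a centred square crop (assumed)."""
    x, y, w, h = centre_square(width, height)
    return x <= px < x + w and y <= py < y + h


if __name__ == "__main__":
    print(centre_square(1280, 720))  # (280, 0, 720, 720)
    print(inside_square(100, 360))   # False: this point falls in the cropped strip
```

So on a 1280x720 master the safe area is a 720x720 square, with a 280-pixel strip on each side that will be lost in a centred square crop. Anything important (faces, captions) needs to stay inside that square.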

I also bought a stabilizer mount, not specifically for this job, but it was very cheap and comes with a smartphone mount, and this might correct some of the unintended WobblyCam shots when operating such a small camera. I also intend to experiment a bit with some other features of the iPhone 5s’ superb built-in camera, such as the panoramic still capture and the 120 fps slow-motion video capture. I also have a clip-on fish-eye lens (which fits any smartphone) that is remarkably good.
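As a rough guide to how slow the 120 fps footage will look on a 25 fps master, here is a small sketch of the arithmetic. It assumes every captured frame is played back at the timeline rate with none dropped; the function name `slow_motion` is hypothetical.

```python
from fractions import Fraction


def slow_motion(capture_fps, timeline_fps, clip_seconds):
    """Return (slow-down factor, playback duration in seconds).

    Assumes every captured frame is played at the timeline frame rate.
    """
    factor = Fraction(capture_fps, timeline_fps)
    return factor, float(clip_seconds * factor)


if __name__ == "__main__":
    factor, playback = slow_motion(120, 25, 10)
    print(float(factor), playback)  # 4.8 48.0
```

In other words, 120 fps material conformed to 25 fps runs 4.8 times slower than real life, so ten seconds of action fills 48 seconds of screen time.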

And finally, I will also be capturing timelapse, as I usually do, using CHDK-hacked Canon Powershot cameras, and will also try out the timelapse function on the GoPro Hero.

http://chdk.wikia.com/wiki/CHDK

Stand by:

https://instagram.com/bolam360

https://twitter.com/RBDigiMedia

http://flyingmonkey.tv

#CastlegateFest

https://twitter.com/castlegatefest

http://cocoartists.co/